A New Feature for Plan Study Scoring

Background on Plan Scoring

As you know, each ProKnow Plan Study is configured with a customized, objective plan scoring algorithm. The details of the algorithm are defined by the members of the clinical planning team organizing each specific plan study.

In general, this algorithm will:

- Identify the list of all the pertinent metrics to be extracted, scored against goals, and studied,

- Set a unique score function per metric (set of rules/objectives to reward success and penalize failure), and

- Generate an overall composite plan score over all metrics.

The scoring details, as well as an online scoring engine, are provided to all users.
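The three steps above can be sketched in a few lines of Python. This is only an illustration, not ProKnow's implementation: the `Metric` fields, the function names, and the linear ramp between the minimal requirement and an "ideal" value are all assumptions, and the metrics and values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One scored metric. Field names are hypothetical, for illustration only."""
    name: str
    weight: float   # maximum points this metric can contribute
    min_req: float  # threshold at which points start being earned
    ideal: float    # value at which the full weight is earned


def metric_score(metric: Metric, value: float) -> float:
    """Assumed score function: linear ramp from 0 at min_req to full weight at ideal.

    Works in both directions: ideal < min_req means lower values are better.
    """
    span = metric.ideal - metric.min_req
    if span == 0:
        raise ValueError("min_req and ideal must differ")
    fraction = (value - metric.min_req) / span
    fraction = max(0.0, min(1.0, fraction))  # no points short of min_req, no bonus past ideal
    return metric.weight * fraction


def composite_score(metrics, values) -> float:
    """Sum the per-metric scores; values maps metric name to the extracted value."""
    return sum(metric_score(m, values[m.name]) for m in metrics)


# Made-up metrics and values, not taken from any real plan study.
metrics = [
    Metric("oar_volume_cc", weight=5.0, min_req=10.0, ideal=0.0),
    Metric("target_coverage_pct", weight=10.0, min_req=90.0, ideal=100.0),
]
values = {"oar_volume_cc": 5.0, "target_coverage_pct": 95.0}
print(composite_score(metrics, values))  # 2.5 + 5.0 = 7.5
```

Real plan studies may use score functions with several breakpoints per metric; the single ramp here is just the simplest shape that rewards success and gives nothing for failure.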

Recent Confusion over Terminology

Plan studies have been around for a decade now. They got their start as “Plan Challenges” organized by a third-party planning company called Radiation Oncology Resources (ROR). The program went fully cloud-based in 2015 via ProKnow Quality Systems. By now there have been over 20 international, free public plan studies, each using a plan scoring algorithm designed specifically for that study.

In a recent study (TROG 2019: SBRT Pancreas), a small group of people contacted ProKnow to express confusion about the metrics of that study. Namely, they did not realize that it was not critical to meet the “minimal requirement objective” (defined as the threshold at which you start earning points) for each and every metric. In fact, some lower-priority (lower-weighted) metrics could be ignored altogether if more quality points could be gained by maximizing some other higher-priority metric. The weights assigned by the clinical planning teams dictate the priority and, as is typical in the real world, the planner has to decide the tradeoffs in order to achieve the highest possible score for a practical, deliverable plan.

In particular, this SBRT Pancreas study had three metrics for the duodenum organ-at-risk (OAR):

- The volume (cc) of the OAR receiving 20 Gy or more, with a weight of 5 points (out of 150 total),

- The volume (cc) of the OAR receiving 30 Gy or more, with a weight of 3 points, and

- The dose (Gy) covering the hottest 0.5 cc of the OAR, with a weight of 2 points.

Some of the top-scoring planners realized that they could eke out a higher total score by giving up on the low-priority, 2-point metric and instead pursuing maximum points on higher-weighted metrics, such as the target coverage metrics. That was a fine strategy, as the 2-point duodenum metric was certainly not critical. However, many participants were confused and thought they had to achieve the minimal requirement for every metric, regardless of its weight. The confusion centered on the unclear term “minimal requirement” (or, as labeled on the scorecards, “Min Req”).
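To make the tradeoff concrete, here is a small arithmetic sketch. The point values are invented for illustration and are not results from the SBRT Pancreas study; only the 2-point weight of the duodenum dose metric comes from the description above, and the metric keys are hypothetical names.

```python
def total_points(points_by_metric):
    """Composite score as the plain sum of points earned on each metric."""
    return sum(points_by_metric.values())


# Plan A meets the Min Req on every metric but earns only partial points on each.
# (Invented numbers; the duodenum metric is worth at most 2 points.)
plan_a = {"target_coverage": 6.0, "duodenum_hottest_0_5cc": 1.0}

# Plan B misses the Min Req on the 2-point metric (0 points there)
# and uses the freed-up dose to earn more on target coverage.
plan_b = {"target_coverage": 9.0, "duodenum_hottest_0_5cc": 0.0}

print(total_points(plan_a))  # 7.0
print(total_points(plan_b))  # 9.0
```

Under these made-up numbers, Plan B scores higher even though it earns nothing on the low-weight metric, which is exactly the strategy some planners used.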

We’re sorry for that confusion! And, in fact, we have implemented a new feature for future plan studies to avoid this confusion and to improve the flexibility of plan scoring.

New: The Option to Define Metrics as “Critical”

In ProKnow Plan Studies, you now have the option to define any subset of metrics as “Critical,” which requires that their “Min Req” (lower threshold objective) be achieved. If the lower threshold objective is not met for any critical metric, then the composite plan score will be set to zero regardless of the sum of all the other metric scores.
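The rule can be expressed as a simple gate in front of the usual sum. The following is a minimal sketch under assumed names (`MetricResult`, `gated_composite_score`, and the `critical` flag are hypothetical, not ProKnow's API), with made-up scores:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricResult:
    """Outcome for one metric of one plan. Field names are hypothetical."""
    name: str
    score: float            # points earned on this metric
    met_min_req: bool       # True if the Min Req threshold was achieved
    critical: bool = False  # True if the study organizers tagged the metric as critical


def gated_composite_score(results) -> float:
    """Return 0 if any critical metric misses its Min Req, else the sum of scores."""
    if any(r.critical and not r.met_min_req for r in results):
        return 0.0
    return sum(r.score for r in results)


# Made-up scorecard: one metric misses its Min Req and earns no points.
not_critical = [
    MetricResult("all_other_metrics_combined", score=85.0, met_min_req=True),
    MetricResult("conformality_index", score=0.0, met_min_req=False),
]
# Same plan, but the failed metric is now tagged as critical.
with_critical = [
    MetricResult("all_other_metrics_combined", score=85.0, met_min_req=True),
    MetricResult("conformality_index", score=0.0, met_min_req=False, critical=True),
]

print(gated_composite_score(not_critical))   # 85.0
print(gated_composite_score(with_critical))  # 0.0
```

A failed non-critical metric only costs its own points, while a failed critical metric zeroes the whole composite score.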

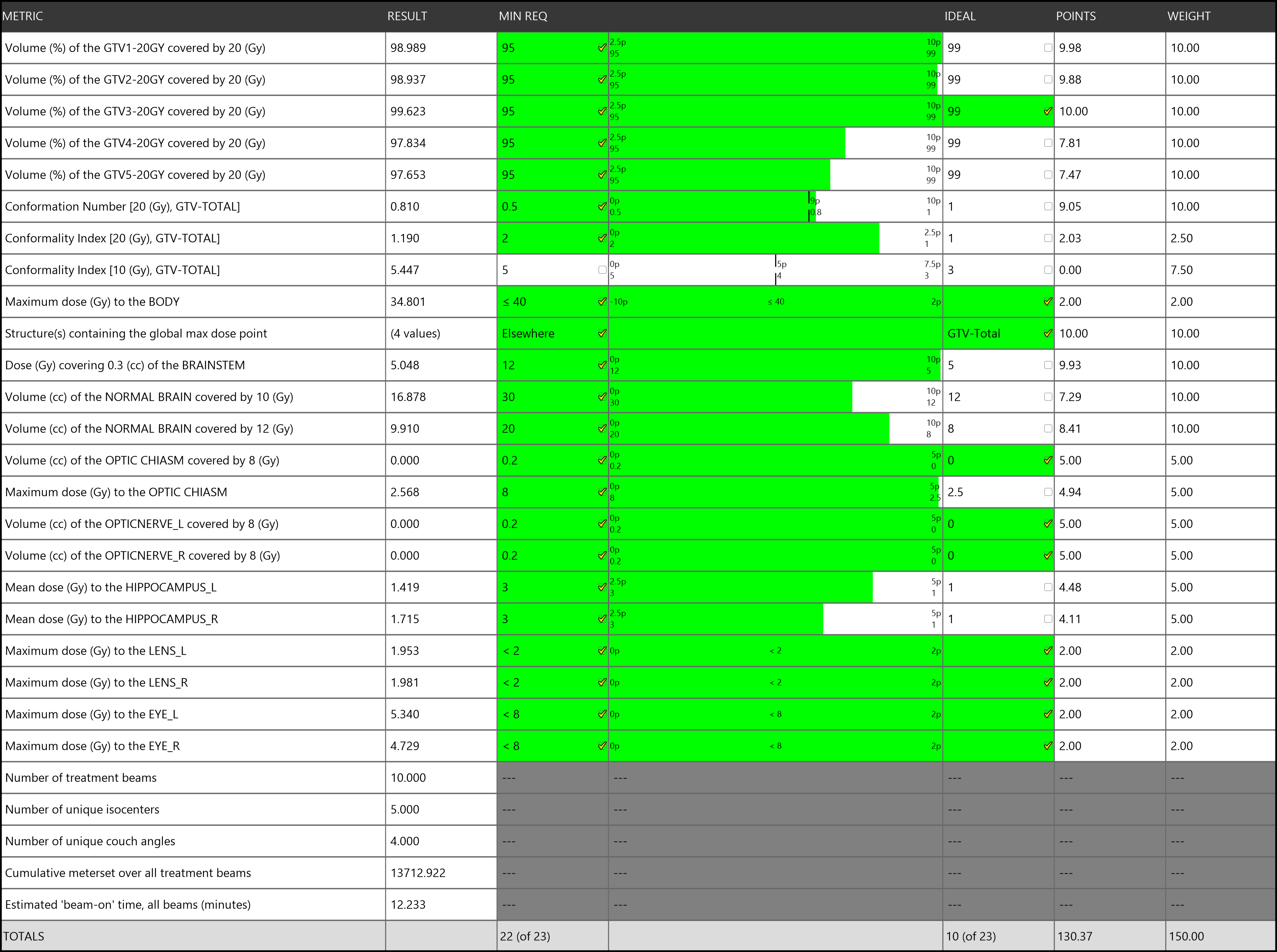

As an example, let’s consider another plan study: the 2018 TROG SRS Brain plan study. Figure 1 shows an example scorecard from that study. Notice that one metric (a Conformality Index calculation) had a score of zero because the resulting value did not at least meet the minimal requirement for gaining points. As a result, that plan lost all 7.5 points allotted to that metric.

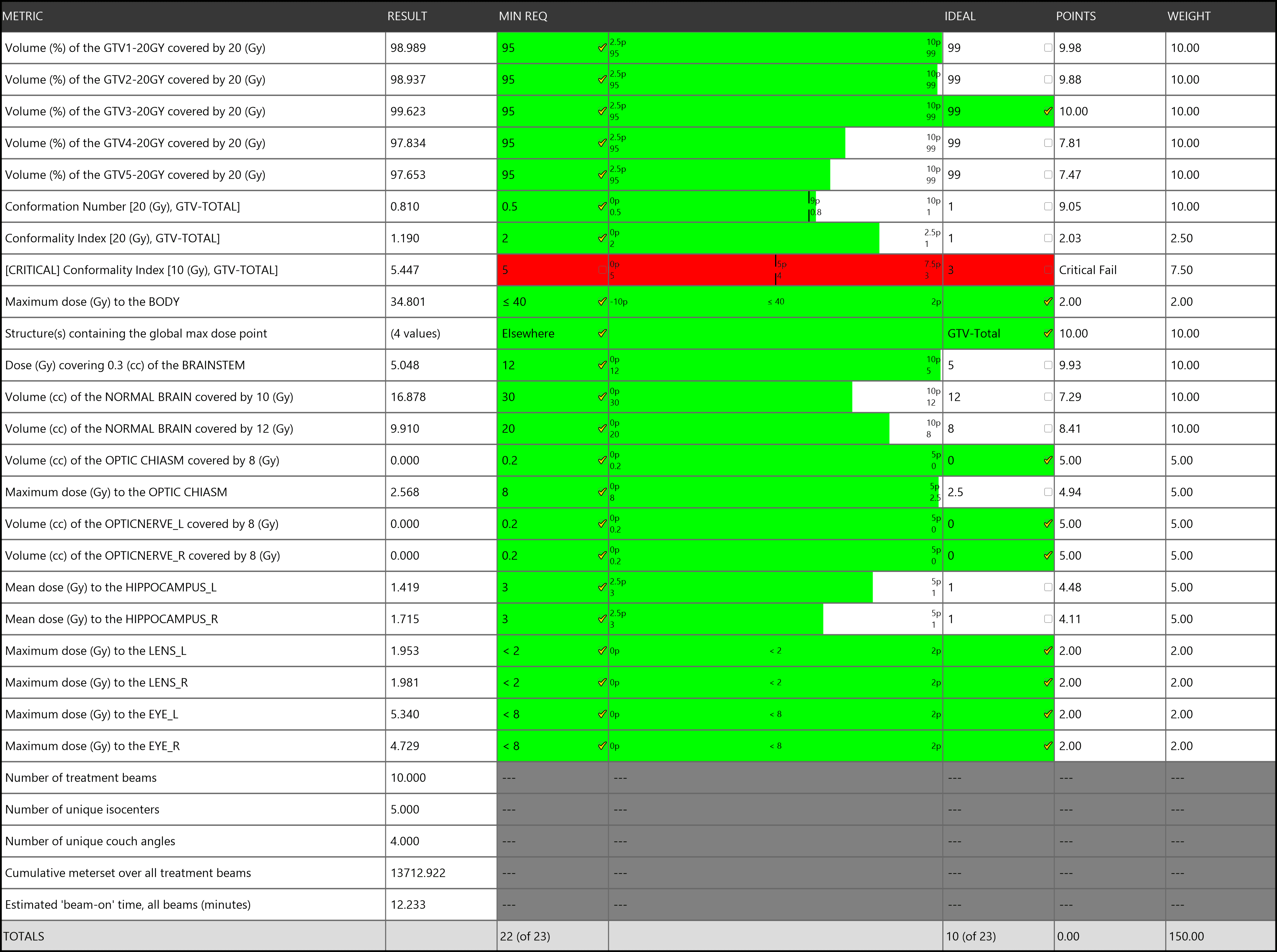

Now, imagine that a subset of metrics, including this Conformality Index, were set to be “Critical” metrics. Then, instead of just losing 7.5 points, the whole composite plan score would be automatically set to zero to alert the user that they must at least achieve the minimal requirement for each critical metric. The scorecard for the same plan would now appear as in Figure 2. Notice how the composite plan score is zero.

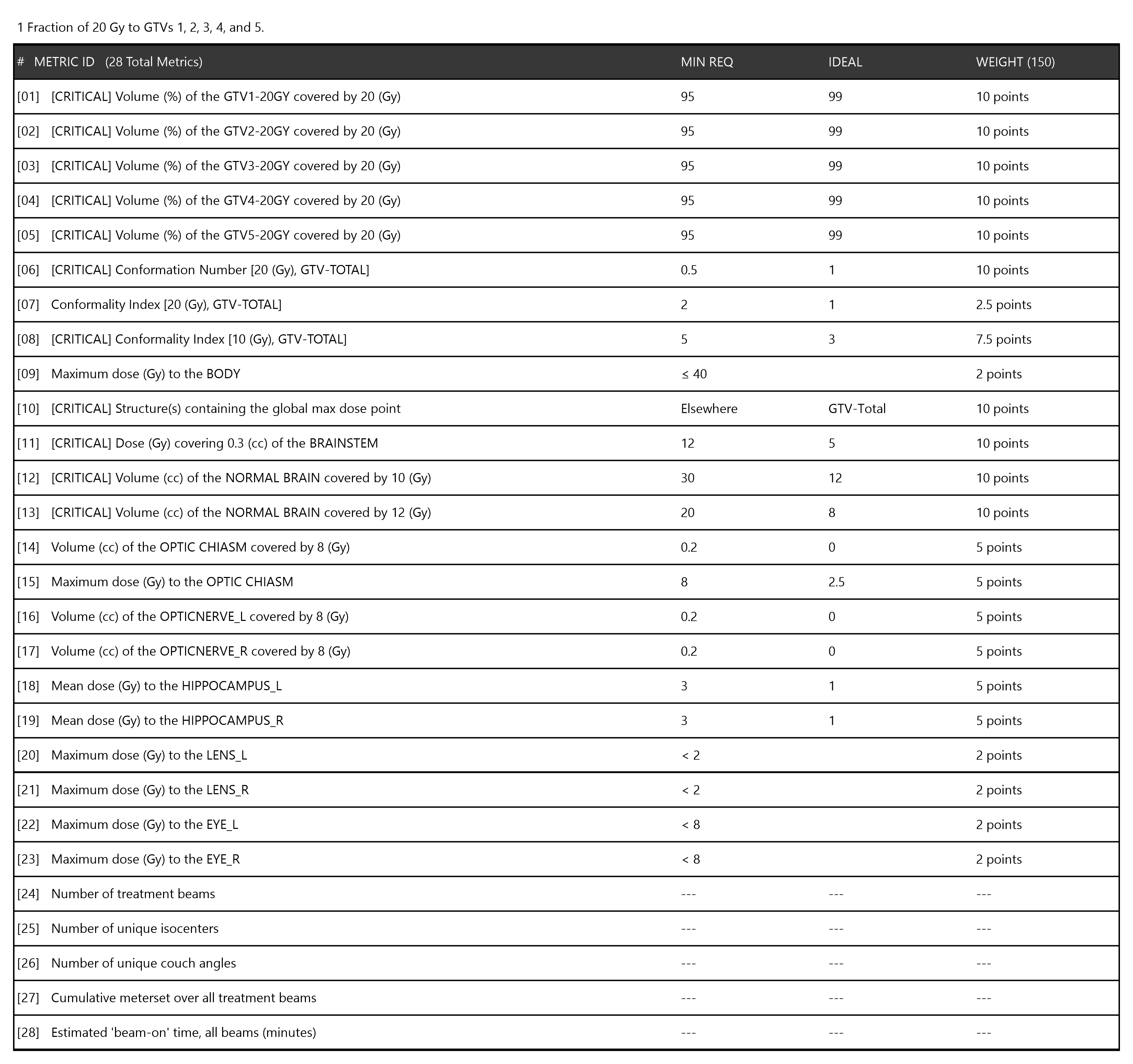

How will participants know which metrics, if any, have been defined as critical? In addition to seeing the effect of failing a critical metric on their scores in real time, participants will find the critical metrics clearly labeled in the plan quality algorithm summary provided with each plan study. An example is shown in Figure 3.

When Will this Feature First Be Used?

The ability to tag metrics as “critical” is available now, so the clinical planning teams for any future public or private plan study can opt to use it. Likewise, the managers of any existing, ongoing plan studies (e.g., those used as part of advanced certification programs or in the curriculum for dosimetry schools) can request that a subset of metrics in each of those studies be set to critical.

Figure Legends